How to Make a Cheap* ARKit Home Robot

We'll show you how to assemble a fun little ARKit-driven home robot in a weekend.

Jason Laan

Here’s a short writeup of the steps involved in making a cheap AR home robot. “Cheap” might be a stretch, since two iDevices are required. But if you happen to have an extra ARKit-capable iPhone lying around, the robot base will only set you back about $40, depending on availability.

Robot Case

We used the great “Romo” robotic base from Romotive. Simply plug your iPhone into the robot, and you can control it with code.

Sadly the company has shut down, so the only way to get one is to buy it used. There are a few on Amazon right now (search for ‘Romotive’). I was lucky and found mine for $35. If anyone has a source for these, let us know in the comments!

Since ARKit uses visual information from the camera to track the phone’s position, we built a wooden frame to lift the phone up so it gets a better view of the environment. You can probably get by with the unmodified Romo base, but in areas of your home with few visual features, ARKit might have a hard time tracking.

Controlling the Robot

Before shutting down, Romotive kindly released all the source code for the robot. The repo has been forked and nicely maintained by Foti Dim here: https://github.com/fotiDim/Romo. After downloading the SDK code, I started with the “HelloRMCore” Xcode project. Follow the instructions in that GitHub repo to create a new project using the Romo SDK.

ARKit and Navigation

With ARKit, we get amazing 3D position tracking of the robot. That alone is a pretty cool addition to iPhone-based bots: for example, the robot could navigate around your house while avoiding obstacles. Unfortunately, ARKit on its own only tracks motion relative to where the session started, so the robot doesn’t know where in your house it is, only that it has moved, say, 3.2 meters from its starting point. This issue is overcome by localizing the robot within a known map. Several companies have sprung up to solve this exact problem, often referred to as the “AR Cloud”. You can read more about the AR Cloud here.

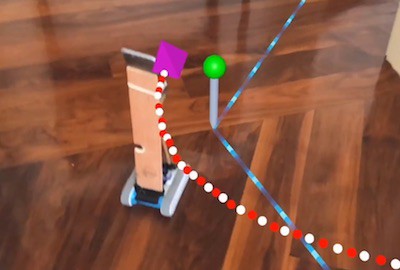

Using an AR Cloud SDK along with ARKit, the robot and other devices can all share a single coordinate system in real time. This means the robot can understand where it is in your house and communicate that position to other phones (which also know their own position within this map). Using AR, we can display the robot’s location, and even draw waypoints for the robot to navigate to.
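To make the idea of a shared coordinate system concrete, here is a minimal sketch of the underlying math, simplified to 2D on the floor plane. It assumes the localization step has already produced a rigid transform from a device’s own session frame into the map frame; the `Pose2D` and `FrameTransform` types and the example numbers are hypothetical and not taken from any SDK.

```swift
import Foundation

/// A 2D pose on the floor plane: position in meters, heading in radians.
struct Pose2D {
    var x: Double
    var y: Double
    var heading: Double
}

/// Rigid transform from one device's session frame into the shared map frame.
/// Hypothetical type: in practice the localization SDK would supply this.
struct FrameTransform {
    var dx: Double        // session origin expressed in map coordinates
    var dy: Double
    var rotation: Double  // rotation of the session frame relative to the map

    /// Rotate the pose by `rotation`, then translate by (dx, dy).
    func toMap(_ p: Pose2D) -> Pose2D {
        let c = cos(rotation)
        let s = sin(rotation)
        return Pose2D(x: dx + c * p.x - s * p.y,
                      y: dy + s * p.x + c * p.y,
                      heading: p.heading + rotation)
    }
}

// The robot has moved 3.2 m straight ahead since its session started.
let robotInSession = Pose2D(x: 3.2, y: 0.0, heading: 0.0)

// Assumed result of localization: the session started at (2, 1) in the map,
// rotated 90 degrees relative to the map axes.
let robotSessionToMap = FrameTransform(dx: 2.0, dy: 1.0, rotation: .pi / 2)

let robotInMap = robotSessionToMap.toMap(robotInSession)
print(robotInMap)
```

Each device applies its own transform, so positions reported by the robot and by the controller phone end up in the same map frame and can be compared directly.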

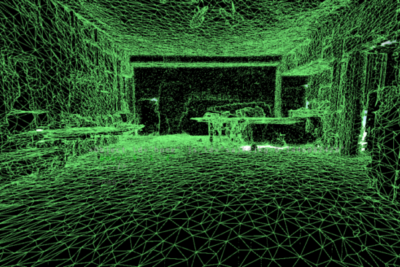

To localize the robot, we used the nice Placenote SDK from vertical.ai. The first step is to use the SDK to create a map of, say, your kitchen. Once a map is created, the phone on the robot can localize itself on that map and communicate its position to other devices that have loaded the same map. “Map” might make you think of Google Maps, where streets and houses are nicely labeled for human interpretation. But the map used to sync positions doesn’t contain any labels like “kitchen” or “wall here”. That’s not to say you can’t add those labels to the AR map yourself.

The final step is to communicate between the robot and the controller using WiFi. There are many off-the-shelf solutions for this, but we used the Multipeer Connectivity framework on iOS. There’s a nice tutorial with some sample code here: https://www.ralfebert.de/ios-examples/networking/multipeer-connectivity/
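Multipeer Connectivity moves raw `Data` between peers, so the two apps need to agree on a message format. The sketch below shows one possible format using `Codable` and JSON; the `RobotMessage` type and its fields are assumptions for illustration, not the format used in the project.

```swift
import Foundation

/// Hypothetical message exchanged between the controller and the robot.
/// Positions are in the shared map frame, in meters.
struct RobotMessage: Codable, Equatable {
    enum Kind: String, Codable {
        case pose      // robot -> controller: where the robot is now
        case waypoint  // controller -> robot: where the robot should go
    }
    var kind: Kind
    var x: Double
    var z: Double
    var heading: Double?  // only meaningful for pose messages
}

/// Serialize a message into bytes suitable for sending to a peer.
func encode(_ message: RobotMessage) throws -> Data {
    return try JSONEncoder().encode(message)
}

/// Parse bytes received from a peer back into a message.
func decode(_ data: Data) throws -> RobotMessage {
    return try JSONDecoder().decode(RobotMessage.self, from: data)
}

// Round trip: what the controller sends is what the robot reads.
let outgoing = RobotMessage(kind: .waypoint, x: 1.5, z: -0.75, heading: nil)
let payload = try encode(outgoing)
let received = try decode(payload)
print(received == outgoing)
```

On the device, the encoded `payload` would be handed to `MCSession`’s `send(_:toPeers:with:)`, and `decode` would run in the session delegate’s data-received callback.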

Finally, a little SceneKit code was used to display the waypoints and the robot’s path.
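Driving toward a waypoint mostly comes down to turning until the waypoint is straight ahead and then moving forward. The following is a minimal sketch of that logic, assuming a differential-drive (tank-style) base whose left and right motor powers range from -1 to 1. All names here are hypothetical, and the resulting powers would still need to be passed to whatever drive call the Romo SDK exposes.

```swift
import Foundation

struct Pose {
    var x: Double
    var y: Double
    var heading: Double  // radians, counterclockwise from the +x axis
}

struct Point {
    var x: Double
    var y: Double
}

struct MotorPowers: Equatable {
    var left: Double
    var right: Double
}

/// Wrap an angle into the range (-pi, pi].
func wrapAngle(_ angle: Double) -> Double {
    var r = angle.truncatingRemainder(dividingBy: 2 * Double.pi)
    if r > Double.pi { r -= 2 * Double.pi }
    if r <= -Double.pi { r += 2 * Double.pi }
    return r
}

/// Clamp a value to [-1, 1].
func clampUnit(_ v: Double) -> Double {
    return max(-1.0, min(1.0, v))
}

/// Proportional steering: turn toward the target, drive forward once aligned.
func steer(from pose: Pose, to target: Point, arriveRadius: Double = 0.1) -> MotorPowers {
    let dx = target.x - pose.x
    let dy = target.y - pose.y
    // Close enough: stop.
    if (dx * dx + dy * dy).squareRoot() < arriveRadius {
        return MotorPowers(left: 0, right: 0)
    }
    // Positive error means the target is counterclockwise (to the left).
    let error = wrapAngle(atan2(dy, dx) - pose.heading)
    let turn = clampUnit(error / (Double.pi / 2))  // full turn power at 90 degrees off
    let forward = max(0.0, 1.0 - abs(turn))        // slow down while turning
    // To turn left, the right side runs faster than the left.
    return MotorPowers(left: clampUnit(forward - turn),
                       right: clampUnit(forward + turn))
}

let start = Pose(x: 0, y: 0, heading: 0)
let ahead = steer(from: start, to: Point(x: 1, y: 0))
let toLeft = steer(from: start, to: Point(x: 0, y: 1))
let arrived = steer(from: start, to: Point(x: 0.05, y: 0))
print(ahead, toLeft, arrived)
```

Calling `steer` on every pose update with the latest localized pose lets the robot correct its course as it drifts.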

More to do

Although this was a quick ‘weekend hack project’, there is a ton of potential in combining a cheap robot base with the ever-improving technology in our cell phones. Better sensors, advances in SLAM, computer vision, and 5G all combine for lots of interesting use cases.

Here are a few ideas that are totally possible using this setup and some coding:

Basic obstacle avoidance

Using ARKit’s 3D feature points, create a voxel grid that the robot can use to plan paths while avoiding objects.
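As a rough illustration of the voxel grid idea, the sketch below buckets 3D points into cubic cells and treats a cell as occupied once it has been observed a few times, which filters out some noisy points. The `VoxelGrid` type, cell size, and hit threshold are all assumptions; on the device, the points would come from something like `ARFrame.rawFeaturePoints`, transformed into the map frame.

```swift
import Foundation

/// Integer index of one cubic cell in the grid.
struct VoxelKey: Hashable {
    var i: Int
    var j: Int
    var k: Int
}

/// Sparse occupancy grid: only cells that received points are stored.
struct VoxelGrid {
    let cellSize: Double  // edge length of a cell, in meters
    let minHits: Int      // observations needed before a cell counts as occupied
    private var hits: [VoxelKey: Int] = [:]

    init(cellSize: Double, minHits: Int) {
        self.cellSize = cellSize
        self.minHits = minHits
    }

    /// Map a point in meters to the cell that contains it.
    func key(x: Double, y: Double, z: Double) -> VoxelKey {
        return VoxelKey(i: Int((x / cellSize).rounded(.down)),
                        j: Int((y / cellSize).rounded(.down)),
                        k: Int((z / cellSize).rounded(.down)))
    }

    /// Record one observed feature point.
    mutating func insert(x: Double, y: Double, z: Double) {
        hits[key(x: x, y: y, z: z), default: 0] += 1
    }

    /// True if the cell containing this point has been seen often enough.
    func isOccupied(x: Double, y: Double, z: Double) -> Bool {
        return (hits[key(x: x, y: y, z: z)] ?? 0) >= minHits
    }
}

var grid = VoxelGrid(cellSize: 0.1, minHits: 2)
grid.insert(x: 0.52, y: 0.05, z: -1.23)  // two points fall in the same 10 cm cell
grid.insert(x: 0.58, y: 0.02, z: -1.21)
grid.insert(x: 2.03, y: 0.05, z: 0.33)   // a single stray point elsewhere

let blocked = grid.isOccupied(x: 0.55, y: 0.04, z: -1.25)
let stray = grid.isOccupied(x: 2.03, y: 0.05, z: 0.33)
print(blocked, stray)
```

A path planner could then treat occupied cells near floor height as obstacles and search for a route through the remaining free cells.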

Automatic house floor plan creation

Let the robot navigate around freely all while creating a nice home floor plan complete with stitched panoramas.

Is the oven on?

Once we have a semantically labelled home floor plan complete with furniture and appliance locations, tell the robot to go to the oven and email back a photo (or better yet, classify ON/OFF using a neural network).

Face recognition + person following

Who is in your home? Where are they? Think Nest doorbell, but one that can move around.

Pet tracking throughout the day

Set the robot to track your pet throughout the day and create a 3D path history: where does that cat go while I’m at work?

Video telepresence + 2D home map

See what the robot sees, but navigate your home by tapping on a 2D floor plan view to change rooms.

The code for the app is here: https://github.com/laanlabs/HomeRobot. It’s a work in progress, so let us know if you run into any problems setting it up.